3D Face Reconstruction: Make a Realistic Avatar from a Photo

In this post, I’ll introduce techniques for reconstructing a 3D face from a Photo. Creating an unreal character from a picture is familiar in these days. You may have seen it already at some application such as memoji and AR emoji as shown in below figure.

Of course, these applications are interesting, but this time I want to talk about creating realistic 3D faces. In the future, you may see these techniques when you create your own avatar in a realistic video game series like Elder Scrolls or FIFA.

In fact, 3D face reconstruction is one of the hot topics in computer vision&graphics and it has been studied for a long time. Early methods took a lot of time because it was based on optimization algorithms. Even the quality of the results were academic.

Recently, with the introduction of Deep-learning especially CNN, we can generate the results much faster than before, and the quality is improved. There are many papers, but I would like to present two papers that I personally think these present nice approaches. First paper is “ Joint 3D Face Reconstruction and Dense Alignment with Position Map Regression Network” which is simply called PRNet. The approach provides generating position map form a photo to reconstruct dense 3D facial structure. Second, paper is “Accurate 3D Face Reconstruction with Weakly-Supervised Learning: From Single Image to Image Set”. Also, it is called “Deep3DFaceReconstruction”. The paper introduces a method which extract coefficient from a photo(or multiple photos) and reconstruct 3D face. It works very robust to occlusion and large poses. From now on, we will briefly introduce each paper and compare the results.

PRNet(Y. Feng et al.)

The key idea is 3D shape can be represented as a 2D position map. In other words, 3D dense point cloud is mapped to 2D position, refer to above figure. In particular, we can regress a complex 3D shape(>60,000 vertices) by using a small 2D map(256x256). More attractive thing is that we can directly extract a high quality UV texture map from the UV position map.

UV position map is estimated by PRNet which is a kind of auto-encoder. The size of UV position map is small, so it runs really fast!!. And the loss function is simple and intuitive.

Loss function is defined as weighted MSE with mask(W)

300W-LP is one of proper datasets for the method, since we can generate the corresponding 3D position from 3DMM coefficients. Refer to below link: https://github.com/YadiraF/face3d

However, since the number of pairs is quite small, authors tried to overcome the problem by using data augmentation.

Deep3DFaceReconstruction(Yu Deng et al.)

For generating 3D face, this approach uses coefficient which are corresponding vectors for PCA bases. If we extract proper coefficients, we can easily generate 3D shape and texture map. So it does not reconstruct directly, unlike PRNet. We can estimate coefficients using R-Net whose structure is similar to a classifier.

It is interesting to use just input images for training R-Net. The approach is called weak supervision because the model is trained without ground truth. Defiantly it is really useful since gathering data is such a challenge job. Authors introduce 3 loss functions: skin-aware photometric loss, landmark loss and deep identify feature loss.

- Skin-aware photometric loss

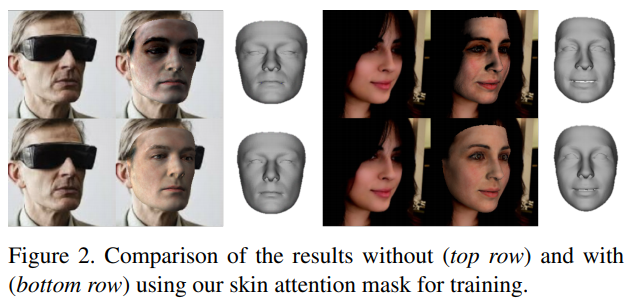

L-2 norm between input(I) and output(I`) is really intuitive loss function. And they introduced skin color based attention mask as shown in above figure A. So the model can focus on face area rather than hair and clothes refer to below figure.

- Landmark loss

We can detect the landmarks from Images and 3D shapes using state of the art 3D face alignment methods. If 3D face alignment methods ensure the accuracy, it would be a proper loss to measure distance between landmark(q) of input image and landmark(q`) of output shape - Deep identify feature loss

The paper introduced loss at the feature level as well as at the image level. It is hard to imagine measuring distance between feature maps. But it helps to get good results refer to below figure.

The approach uses PCA based technique to perform a 3D reconstruct. So it shows great robustness. But the results are bounded on the 3D models which were used to extract PCA bases.

Comparison

I generated some 3D faces form input photos. I choose some images from FFHQ dataset which has never be used as training dataset of both methods. So the samples are reasonable input photos for testing.

- General Portrait

Both approaches generate beautiful results. Results of PRNet looks much more realistic, because the method provide high quality texture map which extract from an input photo directly. But sometime it is a cause of unexpected artifact especially on the invisible part of that like as side of her chin. Deep3DFace makes smoother results than PRNet. But it is really robust no large pose, so you can hard to find artifacts.

- Kids

Both approaches can make good results for kids. We can easily guess the dataset include 3D shape for kids. For the texture map, PRNet well represents color of pupil, eyebrow and lip. And you can notice that Deep3DFace cannot generate teeth because the reconstruction is based on the 3DMM method. But Deep3DFace provide high resolution 3D shape, refer to details on philtrum.

- Old Man

Deep3DFace failed to reconstruct 3D shape from elders. It seems to be made from a photo when he was young. We can notice that it is a limitation of 3DMM method. Elders are unbounded the method. On the other hand PRNet shows features of elders likes white beard and wrinkles. But the method captured glasses as texture of skin. Glasses are attached on the skin. It Looks weird.

- Makeup

PRNet shows attributes of make-up based on high quality texture map. Deep3DFace failed to show for unbounded colors of skin. But the method can represent a detailed expression to a great extend. Look at her mouth up slightly!!

- Too Much Makeup(Cosplay)

Did you notice the problem of PRNet? It is that the method extract unnecessary parts on face such as hair and glasses. It makes results more naturally on same pose, but if you change camera position, it would become artifacts. Since it attached on the skin like as tattoo. On the other hand Deep3DFace sometime failed to represent attribute of input photo, but it does not make unexpected artifacts. All results of Deep3DFace must be bounded on the 3DMM technique. Both methods have pros and cons.

- Bad Lightning Condition(Shadow)

In the case of PRNet, the shadow and highlight are also extracted. We have to remove that, if we want to use the result for other applications. In the case of Deep3DFace, definitely proper teeth should be attached on the 3D shape.

- Profile

In the case of profile, both method did not make a good result. In particular, PRNet could not extract textures from the invisible parts. You can see that the texture of the nose overlaps twice. If we turn the head more, we would see mouth ans eye overlaps several. I did not upload the image, because it’s terrible.

Conclusion

In above results, we saw that the quality of the results depends on conditions of input photo such as poses, ages, occlusions, lights and make-up. There are some conditions that work well for each method, but for getting good results you should use good input photos.

- Frontal portrait

- Reveal forehead, bind the hair

- Take off glasses

- Slight smile

- Light makeup

- Uniform illumination